
Screen International


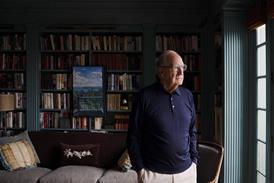

Pressman Film CEO Sam Pressman and Matthew Tierney talk filmmaking and AI

By Jeremy Kay, 2023-06-10 22:02

Source: Pressman Film

“The only way to really understand what it is is to play with it.”
