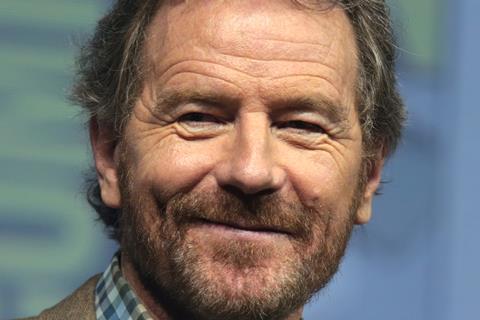

Bryan Cranston, SAG-AFTRA, and two of Hollywood’s biggest talent agencies have agreed what they describe as a “framework” with OpenAI to strengthen guardrails around the replication of performers’ voices and likenesses in the new Sora 2 text-to-video app.

The collaboration comes a few weeks after the launch of OpenAI’s Sora 2 and the appearance of a video apparently showing the late pop superstar Michael Jackson interacting with Cranston’s Walter White character from the TV series Breaking Bad. Cranston’s voice and likeness were generated in some Sora 2 outputs without consent or compensation.

In a joint statement with Cranston, the US actors’ union, United Talent Agency, Creative Artists Agency and the Association of Talent Agents, OpenAI “expressed regret for these unintentional generations” and added that it has “strengthened guardrails around replication of voice and likeness when individuals do not opt-in.”

OpenAI has an opt-in policy for uses of an individual’s voice or likeness in Sora 2, with artists and other individuals having the right to determine how and whether they can be simulated. The company also says it has “committed to responding expeditiously to any complaints it may receive.”

The new framework aligns with principles embodied in the No Fakes Act, pending US federal legislation designed to protect performers and the public from unauthorised digital replication.

Commenting on the statement, Cranston, who brought the Sora 2 video to the attention of SAG-AFTRA, said: “I was deeply concerned not just for myself, but for all performers whose work and identity can be misused in this way. I am grateful to OpenAI for its policy and for improving its guardrails, and hope that they and all of the companies involved in this work, respect our personal and professional right to manage replication of our voice and likeness.”

OpenAI CEO Sam Altman added: “OpenAI is deeply committed to protecting performers from the misappropriation of their voice and likeness. We were an early supporter of the No Fakes Act when it was introduced last year, and will always stand behind the rights of performers.”

