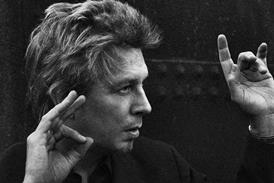

Editor Tim Squyres is a long-time collaborator of director Ang Lee, having edited all but one of the director’s films. Squyres talked with ScreenTech about his work on Life of Pi and the challenges of editing a movie where one of your main characters is a digitally created tiger. His work on Life of Pi has earned Squyres his second Oscar nomination. His first nomination was for Ang Lee’s Crouching Tiger, Hidden Dragon.

ScreenTech: This was your first 3D movie. How did you approach the 3D format?

Tim Squyres: Right from the beginning, when we were deciding whether or not to do the film in 3D, Ang Lee and I and some other people went and saw a lot of 3D movies, good ones and bad ones. When we decided to do it, we decided we wouldn’t do what a lot of other productions have done, which is cut in 2D and use 3D only for screenings.

We decided we would cut in 3D, so right from the first day of dailies all the way to the end of the film, I did everything in 3D. We never worked in 2D at all. And the reason was that neither of us had ever done 3D before and we didn’t want to be thinking when we were cutting, “Well, how is this going to look in 3D? Should I change the way I’m doing things because of how the 3D might look?” We figured if we just worked in 3D, we wouldn’t have to intellectualise that. We could just respond to what we were seeing.

ST: How did it change what you did in the cutting room?

TS: During production, I worked on a 45-inch JVC plasma screen. We shot in Taiwan, where we also had our digital lab and a very good screening room with a Christie RealD projector. After we finished shooting, we brought that projector back to New York and installed it in our editing room, which was built to accommodate it, with an 11.5-foot silver screen and a big sound system. So my primary edit monitor was a big screen.

We could sit in the editing room and work on what felt like a movie. We had a couple of big, comfortable chairs, so we could sit in what was really a very good small screening room and watch the movie. Being able to try something and then experience it the way an audience would, on a big screen, really makes a difference. Getting that sense matters even more for a 3D film than for a 2D one.

ST: Was it a different experience editing on a screen that large?

TS: When you’re editing, you’re looking at the image on your screen and trying to imagine what it’s going to be like in a movie theatre. I have a lot of experience doing that in 2D, but I didn’t have experience doing that in 3D. And because we could afford it on this film, we decided we would just cut on a big screen. I was concerned that it would be disorienting to have to shift focus that far back, but it was fine.

The biggest annoyance is that you have to keep the light levels in the room low, which makes it a little harder to see the keyboard, but beyond that, there was no problem cutting on a big screen. It was great. If I was doing sound work or some of the more technical things without Ang in the room, I would switch to the plasma screen, just to save electricity. Whenever he was there, we worked on the big screen.

ST: How did you handle the technical aspects of the 3D? Was there a dedicated stereographer on the film?

TS: There was a stereographer on set during the production. I was the post-production stereographer, and it turns out you have to make a lot of adjustments in post just to make the movie watchable and to get things to flow from shot to shot. I adjusted convergence on probably about half the shots in the film. It’s great to be able to try different things with the convergence.

There are a couple of big convergence moves that we do. For example, there’s a shot where the sailors put Pi on the lifeboat and the lifeboat detaches and free-falls down to the water. To accentuate that drop, I pushed convergence back as the boat starts falling, so the shot really opens up. It gives a sense of falling farther than the boat really does.

ST: Do you find that with a VFX-heavy film like Life of Pi, so many things are predetermined you have fewer choices as an editor?

TS: Yes. I was actually very concerned going into this film that I wouldn’t have much to do. We knew we had to go into shooting it with a plan and that we couldn’t go into every scene and shoot a lot of coverage, which I enjoy. We knew we wouldn’t have that luxury on this film. We did a previs of over an hour’s worth of the film. My fear was they would shoot the previs and hand it to me and I would slap it together and go home. It turned out that was not the case – or it was only somewhat the case.

I certainly had less coverage than I usually have. When I work with Ang, I try a whole bunch of things and I send him several versions. But in this film there weren’t many different ways of cutting it, in terms of structure. In terms of picking takes, yes, it was business as usual, but what was really interesting is that usually I’m limited to the performances they shoot on set. In this film, one of our main characters, the tiger, wasn’t created yet, so I wasn’t limited to a performance. Ang and I could reblock the scene and adjust the tiger’s performance accordingly in post.

ST: How did you go about assembling the scenes with the digital animals?

TS: I didn’t want to be cutting from a shot of our actor to a shot of an empty boat, back to our actor and back to the empty boat. So we had the previs team strip out the tiger animation they had done and give it to us on green screen, so we could key it and track it into our own shots. Generally, I would assemble a scene with just the plates, then hand it to my staff, and very quickly all those shots would have the animals and backgrounds in them too; we had a big library of skies and oceans we could use.

So by the time we screened the assembly, every shot had the animals in it, and backgrounds too, so we weren’t looking at the walls of the wave tank. You could sit and watch it like a movie without having to imagine any of the elements. That was really important, and it goes back to something we did on Hulk. You can do a lot of very good comping in the Avid.

On Hulk, I had all these 3D models of Hulk and airplanes and everything, and I blocked that entire movie so you could watch it and see what was going on right from the beginning. On Hulk I would generally keep all the compositing work in my sequence. On Life of Pi, if I had done that, each of my sequences would have been 200 megabytes, so my assistants did the compositing and gave me mixdowns.

ST: Can you talk a bit more about your workflow? You were using Avid Media Composer.

TS: Media Composer 6 has all kinds of fantastic 3D support built into it, but unfortunately it didn’t come out until several months after we started shooting. The data structure in Media Composer 6 is very different from 5. You have to build 3D clips differently. So once you’ve started a project on 5, you can’t move up to 6. My assistant Mike Fey and I had been beta testing Media Composer 6, but we couldn’t use it on Life of Pi.

So we came up with all kinds of tricks and procedures to be able to work in 3D with side-by-side media. When we were shooting, we worked at a very high resolution, DNx 115, because with stereo any discrepancies between the two eyes are annoying, so we wanted to minimise compression artifacts. I had worked in DNx resolutions before, but only at DNx 36. We brought the footage in side-by-side, and for each shot we also had discrete left-eye and right-eye media that we used for a lot of our compositing.

For every shot we used three times as much space, because we had three times the footage – left eye, right eye and side by side – and DNx 115 takes about three times as much storage as DNx 36, so we were using roughly nine times as much storage. We started with a 48-terabyte Avid Unity and went up to around 60 terabytes.

ST: What does 3D really bring to a movie? Is it just 2D with a bit extra, or is it a completely different way of moviemaking?

TS: You’re still telling a story. It’s not like you’ve invented a new art form. When an audience goes away, what they go away with is character and story and emotion. Those don’t change. What changes, hopefully, is the sense you have of being in the movie.

In deciding whether or not we should do the movie in 3D, we thought: we have many, many scenes with a kid and a lifeboat on the ocean and a tiger, and that’s it. We wondered how to make that visually interesting and keep it fresh. It seemed like 3D could give us a sense of being immersed in that space, so that the ocean and sky could be more than backgrounds and really establish a presence.

Also, the film is an adventure story, but there’s a real spiritual element to it, and we felt, as a lot of people seem to, that in 3D you get that feeling of being in that space and of what it means to him.

No comments yet